I recently attended the 3rd Annual Acumen Users Conference held in Nashville, TN. On opening day I had the privilege of discussing the three CMS incentive programs that nephrologists face today. PQRS, meaningful use, and the physician value-based payment modifier are familiar topics to readers of this blog. Each has its own set of nuances for the practicing nephrologist, and over the years I have become intimately familiar with each one of these programs. While some may disagree with the underlying methodology employed in these programs, there is typically a reasonable explanation buried within the depths of the program framework.

Of late, I have found my attention drawn to the CMS Dialysis 5-Star rating program. While this program may not directly impact nephrologists in the way the three other incentive programs do, it is very clear you will be affected by the results of this remarkably ill-advised program. If you are the medical director of a dialysis facility, or perhaps a joint venture partner, or outright owner of a facility, you will be impacted by the results and by the casual interpretation of the 5 stars. If you provide care to dialysis patients, at some point in the not-too-distant future you may be faced with needing to explain this program to your patients and your community, a program that, in my view, simply defies logic.

Quality is not a zero-sum game

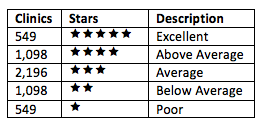

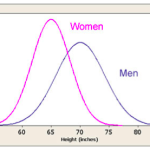

A couple of weeks ago I described one of the biggest concerns with the 5-Star program. Forcing every dialysis facility in the country into a normal distribution fundamentally assumes some providers are practicing excellent medicine and an equal number are failing. I have reproduced the consequences of assuming quality is a zero-sum game in the table below, using the number of clinics reported during a recent CMS presentation.
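To make the zero-sum point concrete, here is a minimal sketch of rank-based star assignment. The 10/20/40/20/10 split and the clinic scores are assumptions for illustration; the post does not state the exact cutoffs CMS uses, and `assign_stars` is a hypothetical helper, not CMS code.

```python
def assign_stars(scores, cutoffs_pct=(10, 30, 70, 90)):
    """Assign 1-5 stars purely by rank.

    cutoffs_pct are ASSUMED cumulative percentages (bottom 10% -> 1 star,
    next 20% -> 2 stars, middle 40% -> 3 stars, and so on).
    """
    n = len(scores)
    order = sorted(range(n), key=lambda i: scores[i])  # worst to best
    stars = [0] * n
    for rank, idx in enumerate(order):
        # rank * 100 >= c * n means at least c percent of clinics rank below
        stars[idx] = 1 + sum(rank * 100 >= c * n for c in cutoffs_pct)
    return stars


# 100 hypothetical clinics whose scores differ by less than 0.01 in total
scores = [0.900 + i * 0.0001 for i in range(100)]
stars = assign_stars(scores)
counts = {s: stars.count(s) for s in range(1, 6)}
print(counts)
```

Even though every clinic in this toy example performs almost identically, the ranking still produces a full spread of 1-Star through 5-Star facilities, because the number of clinics in each bucket is fixed in advance.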

The methodology employed to generate the stars remains very troubling to the renal community. I am reminded of the cartoons I used to watch as a kid. I am sure you remember them as well: someone is smacked on the noggin and immediately sees stars swirling about their head. Spend a little time with this program and you, too, will be seeing stars.

Data integrity

As if forcing the bell curve was not a big enough problem, CMS also has a major problem with data integrity. The 5-Star rating system is effectively a roll up of seven individual measures:

- Standardized mortality ratio (SMR)

- Standardized hospitalization ratio (SHR)

- Standardized transfusion ratio (STrR)

- A measure of spKt/V

- A measure of hypercalcemia

- The percentage of patients with an AV fistula

- The percentage of patients dialyzing with a catheter for more than 90 days

During the Medicare Learning Network call on July 10 announcing the program, CMS reminded us there are 6,033 dialysis facilities in this country. Remarkably, however, almost 1 in 5 (1,130 dialysis facilities to be precise) were unable to report at least one of the seven measures outlined above. Undaunted, CMS created a “quick fix” of sorts, assigning an average score for missing data to 588 facilities and simply excluding another 542 facilities from the 5-Star program. Think about this for a moment. The programs that you and I are familiar with tend to penalize the absence of data. In fact, measure number four in the list above examines your dialysis facility’s ability to deliver a Kt/V greater than 1.2. Failing to report a patient’s Kt/V is treated as performance not met; in other words, it’s as though the patient’s Kt/V was less than 1.2, and you are penalized for the absence of data. But fail to send any Kt/V data, and CMS will assume you are average.

Notice what happens when quality is assumed to be average in a zero-sum game. Assuming the absence of data is “average” impacts everyone else in the game. I guarantee you assigning an average score for a measure in those 588 clinics caused someone else, someone who played by the rules and reported all of their data, to drop from 3 Stars to 2 Stars, or to drop from 2 Stars to 1 Star. Is this a rating system that can be relied upon for an “at a glance” assessment of quality by the general public?
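The arithmetic and the displacement effect can be sketched in a few lines. The facility counts come from the CMS call quoted above; the clinic scores and the `share_ranked_below` helper are hypothetical, chosen only to show how an imputed average shifts the ranking of clinics that did report.

```python
from statistics import mean

# Figures quoted above from the July 10 CMS call
TOTAL, IMPUTED, EXCLUDED = 6033, 588, 542
missing = IMPUTED + EXCLUDED        # facilities missing at least one measure
share_missing = missing / TOTAL     # "almost 1 in 5"


def share_ranked_below(score, pool):
    """Fraction of facilities in the pool scoring strictly lower."""
    return sum(s < score for s in pool) / len(pool)


# HYPOTHETICAL scores for seven clinics that reported the measure
reported = [40, 45, 50, 55, 60, 65, 70]
imputed_score = mean(reported)      # non-reporters are assumed to be average

# Three non-reporting clinics join the ranking at the average score
pool_with_imputed = reported + [imputed_score] * 3

clinic = 50                         # a reporting clinic just below average
before = share_ranked_below(clinic, reported)
after = share_ranked_below(clinic, pool_with_imputed)
print(f"{missing} facilities, {share_missing:.1%} of the total")
print(f"share ranked below the clinic: {before:.1%} -> {after:.1%}")
```

The clinic's own score never changes, yet its standing slips because facilities with no data are placed ahead of it. In this toy model a clinic above the average moves the other way, so the imputation reshuffles the ranking rather than leaving it untouched.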

Far more troubling than this is what we are beginning to uncover internally. Several of my colleagues at Fresenius have been looking at our 5-Star data file, and there are some serious data integrity issues. One of the things we have focused on is Kt/V. At first glance, we could not determine why the 5-Star data suggested we were not doing so well with dialysis adequacy, when in fact the lab results obtained in the clinics suggest otherwise. Last week an explanation began to emerge. Our preliminary review suggests a significant number of results did not make the trip to CMS. Some of the Kt/Vs that were measured, resulted appropriately to the clinics, and used to make treatment decisions for patients are not being counted by 5 Star. When we redo the math, this single data integrity issue appears to misclassify hundreds of dialysis clinics. If other providers are suffering from the same data integrity problem, the consequences could indeed be astronomical. A system that inappropriately assigns Stars will clearly mislead the intended audience.

Hypercalcemia

Notice measure number five on the list of measures above. That’s right, there are two lab-based measures among the lucky seven: spKt/V and hypercalcemia. Hypercalcemia for the purposes of 5 Star is defined as the percentage of patient months for which the patient’s 3-month-rolling-average uncorrected calcium exceeds 10.2 mg/dL. Think about this for a moment. Of all the labs we follow like a hawk in the dialysis world, we are being measured by hypercalcemia. Practicing nephrologists will be shocked when they learn that this measure is considered critical to overall patient care outcomes. Let’s see why.

The architects of the 5-Star program probably do not practice nephrology, but those of you who provide care to this remarkably vulnerable patient population are very familiar with the complexities of bone and mineral metabolism. At a minimum, it involves balancing the interaction of dietary phosphorus intake, compliance with phosphorus binders, dialysate calcium, diet, intravenous vitamin D analogues, and oral calcimimetics. From a laboratory perspective, this delicate balance is tracked with three tests: phosphorus, iPTH, and calcium. Ask any nephrologist which of the triumvirate is most important and the unequivocal answer is not going to be calcium. Yet the avoidance of hypercalcemia is on equal footing with many of the measures making up this program.

Flawed methodology

The really bad news about the calcium measure in my mind is that this measure carries more weight than SMR. It carries more weight than SHR. I am not kidding. In the world that is 5 Star, how you measure up with respect to hypercalcemia is valued more than STANDARDIZED MORTALITY RATIO! How is that possible??? The answer, my friends, is “Factor Analysis.” Analysts on the CMS call reported the use of factor analysis as a mechanism to ensure the methods employed in 5 Star were not double counting. Granted, I am not a statistics guru, but the statistical argument to include a lab test that most would rate as the least important of the typical bone and mineral metabolism triumvirate AND to rate it higher than SMR and SHR is beyond my comprehension.
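The weighting follows from the roll-up described in the author's reply in the comments at the end of this post: the seven measures are grouped into three domains, averaged within each domain, and the three domain scores are then averaged. A minimal sketch of the implied per-measure weights, assuming exactly that structure (the domain labels and the `implied_weights` helper are illustrative, not CMS terminology):

```python
from fractions import Fraction

# Grouping as described in the comment reply; domain names are assumed labels
DOMAINS = {
    "standardized ratios": ["SMR", "SHR", "STrR"],
    "vascular access": ["AV fistula", "catheter > 90 days"],
    "labs": ["spKt/V", "hypercalcemia"],
}


def implied_weights(domains):
    """Weight of each measure when domains are averaged equally and
    measures are averaged equally within their domain."""
    per_domain = Fraction(1, len(domains))
    return {
        measure: per_domain / len(measures)
        for measures in domains.values()
        for measure in measures
    }


weights = implied_weights(DOMAINS)
for measure, weight in weights.items():
    print(f"{measure:>20}: {weight} ({float(weight):.1%})")
```

Under these assumptions each standardized ratio carries one ninth of the final score, while each lab measure carries one sixth. A domain with fewer measures gives each of its measures a larger share, which is how hypercalcemia ends up outweighing SMR.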

Ponder for a moment the impact of shining a light on calcium as the proposed 5-Star plan does. Consider the potential unintended consequences of doing so. (1) Perhaps bone and mineral metabolism algorithms are being modified as I type this post. We are being measured by the presence of hypercalcemia. Will we see a rise in low calcium baths? Will we see a shift in the use of vitamin D analogues and calcimimetics? Will we see more parathyroidectomies? Will phosphorus values rise as we focus with laser-like intensity on the avoidance of hypercalcemia? It should not go unnoticed that this measure is by far the most actionable of the seven. What will be the unintended consequences of relying on factor analysis to assess the importance of a 5-star component measure?

Transparency

One of the driving forces behind 5 Star is transparency. How can we make it easier for patients to understand whether or not a dialysis clinic (or a hospital or a nursing home or a physician) is providing good care? Transparency is a wonderful idea, and one of which I am very much in favor. But you better be darn sure the data you are using to make the call is accurate and your methodology is sound and well understood, lest you find yourself in a position of misleading patients. In my view, 5 Star fails on both counts.

As a nephrologist, I am perhaps a better-informed “shopper” when it comes to selecting a dialysis facility. As I look at the seven measures in 5 Star, the one that would get my attention is SMR. We can argue about the validity of including this one in the calculation, but if it is in the mix, some indication of my longevity at the clinic would be very appealing to me.

I mentioned our ongoing internal review of the Fresenius data. Another troubling finding is the following observation: when you look at the SMR for the Fresenius facilities rated 1 Star, 95% of those clinics are within 1% of the SMRs for the Fresenius facilities with 3 Stars. Perhaps more appalling, when comparing the 1-Star SMRs with our 5-Star-rated clinics, almost half of the 1-Star clinics have an SMR within 1% of an SMR in our 5-Star facilities. And this program is supposed to help Medicare beneficiaries discern quality? Really? I guess the hypercalcemia measure is the one we should bet our stars on.

While we are on the topic of SMR, people who know a lot more about this subject than I do argue it’s inappropriate to use it in 5 Star. My colleague Dr. Eduardo “Jay-r” Lacson raised this very issue during the call on July 10. Jay-r could explain this far better than I can, but it goes something like this. The standardized ratios like SMR all come with a confidence interval. If my clinic’s SMR is reported as 1.02, the confidence interval could be 0.7 to 2.3. What this basically implies is “we don’t know what the actual mortality ratio is, but we are confident it’s somewhere between 0.7 and 2.3.” This is one of the principal reasons Dialysis Facility Compare reports mortality as expected, above expected, or below expected. Rolling the mean SMR up in the 5-Star calculation sweeps this very important fact under the rug.
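A rough sketch of the confidence interval point, assuming a simple rule in which a clinic is flagged only when its whole interval sits on one side of 1.0 (an SMR of 1.0 means observed deaths equal expected deaths). The exact rule Dialysis Facility Compare applies is not given in this post, so `classify_smr` is a hypothetical stand-in, and the second clinic's interval is invented for comparison.

```python
def classify_smr(ci_lower, ci_upper, reference=1.0):
    """Bucket a clinic by where its SMR confidence interval sits
    relative to the reference value (ASSUMED classification rule)."""
    if ci_lower > reference:
        return "above expected"
    if ci_upper < reference:
        return "below expected"
    return "as expected"


def intervals_overlap(a, b):
    """True when two (lower, upper) intervals share any value."""
    return a[0] <= b[1] and b[0] <= a[1]


clinic_a = (0.7, 2.3)   # the interval used in the example above (SMR 1.02)
clinic_b = (0.6, 1.9)   # HYPOTHETICAL second clinic with a lower point estimate

print(classify_smr(*clinic_a))                 # as expected
print(classify_smr(*clinic_b))                 # as expected
print(intervals_overlap(clinic_a, clinic_b))   # True
```

Both clinics land in the same bucket because neither interval excludes 1.0, and the intervals overlap heavily. Ranking the two by point estimate alone would separate clinics that the data cannot actually tell apart.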

Other valid criticisms of SMR include the fact that much of the standardization is derived from the 2728 form. Having personally completed many of these in my career, this fact alone gives me pause. And last but not least, including SMR has been criticized because it inappropriately lays every dialysis patient death at the feet of the clinic. If one of your patients meets his maker due to a close encounter with a milk truck, your 5-Star rating will suffer.

Seeing stars

I mentioned at the beginning of this post my experience with the CMS incentive programs and my evolving understanding of the intersection of those programs with the practice of nephrology. While I will admit each of those programs has its flaws, believe me when I say they are trivial compared to the 5-Star program issues. There are so many problems with this program it is hard to call one out: forcing the bell curve because someone believes quality is a zero-sum game, grading clinics when 1 in 5 cannot report a full set of data, the potential Kt/V data integrity issue referenced above, weighting a measure of marginal clinical relevance because it emerged from the fog thanks to “factor analysis,” or rushing to push this out because we don’t want “perfect” to be the enemy of “good.” Each one is problematic, but mix them together and you have a recipe for disaster.

I have not met the architects of this program, but last week I had the opportunity to participate in a conference call with some of them. It is clear these are very intelligent people, and they are committed to making it easier for Medicare beneficiaries to understand quality. But I agree with the rest of the renal community: 5 Star widely misses the mark.

This was recently well articulated by Dr. Ed Jones. For those of you who do not know Ed, he is a hardworking nephrologist. He takes care of these patients every day. He is a past president of the Renal Physicians Association and the current Chair of Kidney Care Partners, a national coalition representing the entire renal community. NN&I recently quoted Ed: “KCP supports efforts to increase transparency through the public reporting of quality data that provide meaningful information to empower patients. However, as designed, the ESRD Five-Star Program will lead to substantial confusion among patients and their loved ones, because it provides misleading and inaccurate information, which does not reflect the actual quality of care being provided and because the ratings are inconsistent with other quality scores being publicly reported by CMS.”

I wholeheartedly agree with Ed. At a minimum, this program will confuse our patients, but even worse, it will mislead them. The folks at the Center for Clinical Standards and Quality of CMS recognize the importance of data integrity to this program’s success. We are diligently working on validating the preliminary Kt/V findings I referenced earlier, along with other issues of data and counting integrity, and we will promptly share those findings with the folks at CMS when our analysis is complete.

The renal community is committed to supporting a program that accurately conveys quality in a manner our patients can understand. The 5-Star program as it stands today will mislead our patients. This creates a dangerous precedent and the resulting unintended consequences for our patients are unfathomable.

The conversation with CMS continues. I hope we can convince the powers that be to rethink the planned release in October. If not, many of you will soon be seeing stars as well. If there was ever a time for CMS to hear from you, that time is now. Join the conversation and let them know how you feel.

1. Werner RM, Asch DA. The unintended consequences of publicly reporting quality information. JAMA. 2005;293(10):1239-44.

Bill Peckham says

I think there is another problem with the data – at least based on my understanding from reading your very helpful posts – call it the geography problem.

For instance, I think the standardized hospitalization ratios reported in the Dialysis Facility Reports have expected outcomes in the context of their states. In the case of Dialysis Facility Compare the three buckets can accommodate a unit’s geography, whether the outcome was as expected or above/below expected, since the expectation takes into consideration the unit’s community level outcomes. If people in Mississippi generally are more likely to be hospitalized than people in South Dakota, their respective SHRs take that into account.

How is this geographic variation accommodated under the Five Star program? Is the performance of units in Washington State and the District of Columbia reckoned against each other based on actual numbers, or are their hospitalization and mortality ratios adjusted so that every state has some 5 and some 1 star facilities? Are the fistula outcomes ranked directly against each other? Or are they first filtered through a geographic lens?

If the program ranked all 6,000 units across the country directly, a bell curve of actual fistula usage or any of the measures, I think you would see geographic clumping in the data; states would be seen as performing better or worse than other states. I would go on to hypothesize that this clumping would correlate to Medicaid reimbursement, but I digress. I don’t think geographic clumping is an outcome the designers of this program will allow. Instead I think they must be incorporating geographic variations into the math. Thus I believe this means there will be 18 Five Star programs (based on the number of renal networks) or 56 (based on the number of US political entities) or some number in between, and I anticipate that there will be an equal number of ways that this ends badly.

Terry Ketchersid, MD, MBA, VP, Clinical Health Information Management says

You make an excellent point, Bill, and in fact we see significant geographic variation within the Fresenius data. As I recall we saw this with Fistula First. Of interest, the nuts and bolts of the program basically rank all 7 individual measures nationally. Then they are grouped into 3 domains: one contains the 3 standardized ratios, one contains the 2 access measures, and one contains the 2 lab measures. The measures within each domain are averaged and then the 3 domains are averaged. If you receive dialysis in a state or ESRD network that for some unexplained reason does not do well on one or more measures (perhaps due to regional patient demographic differences), the clinics in that region will suffer when compared with the nation. There are likely to be a few states where the majority of clinics are 1 or 2 Star clinics…not the most appropriate message for patients. Thank you for your thoughtful comment.